vLLM Deep Dive I: Intro and Environment Setup

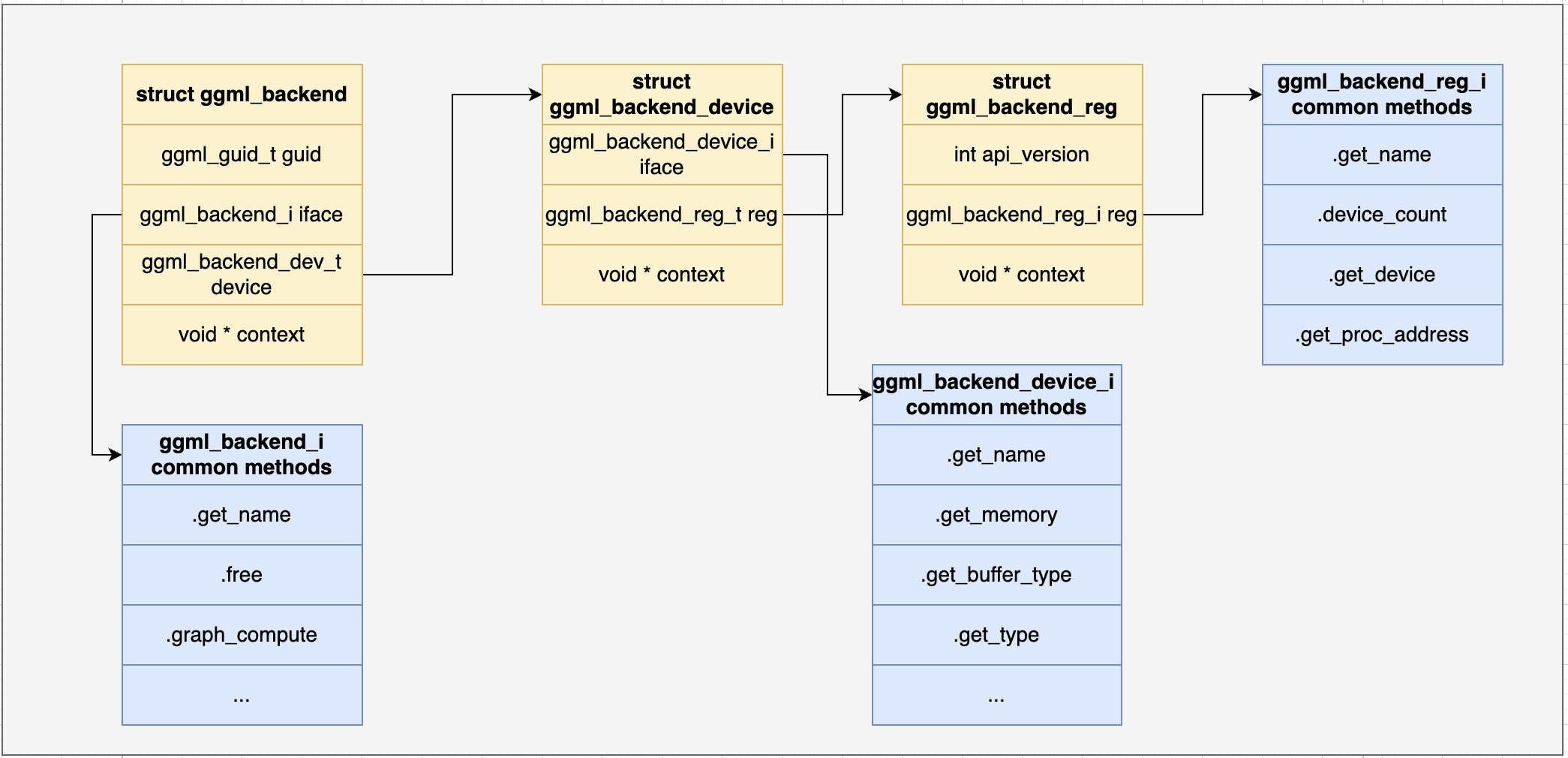

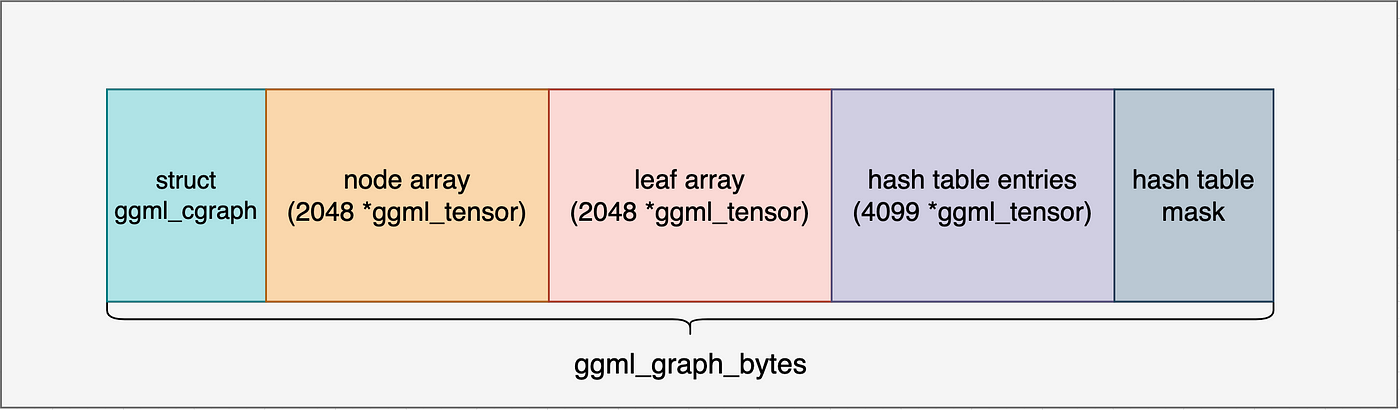

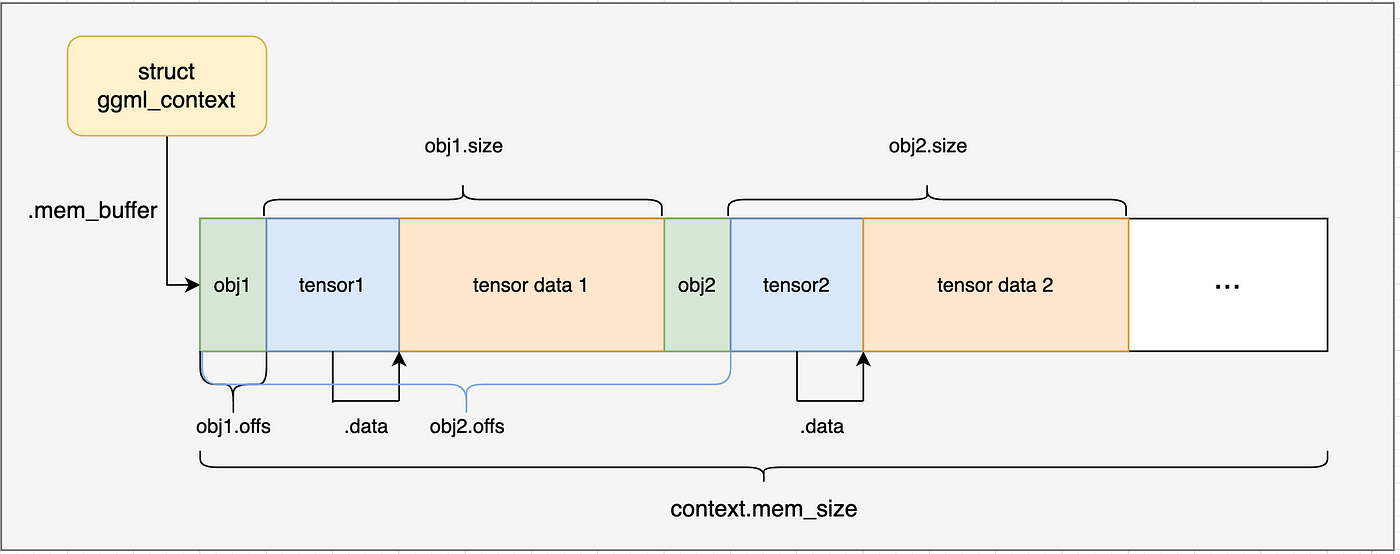

Introduction

It’s been a while since my last blog series on GGML. GGML is an excellent framework for running LLMs on-device (laptops, phones, and other resource-constrained environments) thanks to its pure C/C++ implementation and zero third-party dependencies, which make it easy to use when performance and portability matter. That said, it isn’t designed for industry-scale serving, where you need to manage thousands of high-end GPUs reliably.

Recently, I had the chance to dig into vLLM, probably the most popular inference framework for large-scale LLM serving. At a high level, vLLM’s architecture is clear and intuitive, but once you dive into the source code, the implementation details can feel opaque and under-documented. There are plenty of guides on how to use vLLM, but not many that explain how it works under the hood. ...